UltraSoundLog: Location/Person-aware Sound Log System for Museums

Abstract

To enhance the experience in a museum or exhibition, a voice recorder is useful for recording users' comments and providing explanations of the exhibits. However, to make use of the sound log after the event, the voice recorder should attach appropriate tags to the sound data so that the intended data can be extracted from a long-term sound log. In a previous study, we proposed a method for embedding ultrasonic IDs in a sound log and evaluated it in a laboratory environment. In this study, we used the proposed method at a real event and confirmed that the wearing position of the microphone affects the accuracy of ultrasonic ID recognition, because the volume of ultrasound acquired differs at each position. We propose a new method that recognizes the position of the microphone to improve the accuracy of ID recognition, and we used the improved method at another real event.

Assumed Environment

We assume a situation in which our system is used at an exhibition, such as in a museum or gallery, as shown in the figure on the right. Each showpiece or presenter has a unique ultrasonic ID, and users record sound. Tags are attached to the sound log according to the ultrasonic IDs so that users can extract the intended data from the long-term sound log. We use commercial devices (smartphones and PCs) as ultrasonic transmitters and receivers.
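The role of the tags can be illustrated with a minimal sketch. It assumes that each tag is stored as a time span plus the recognized ID; the `TaggedSegment` type, its field names, and the sample values are hypothetical and are not taken from the actual system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaggedSegment:
    """One stretch of the sound log during which an ultrasonic ID was heard.

    Hypothetical record layout, used only for illustration.
    """
    start_s: float  # offset from the start of the recording, in seconds
    end_s: float    # end offset, in seconds
    tag_id: int     # ultrasonic ID of the showpiece or presenter


def segments_for_id(log, tag_id):
    """Return only the segments tagged with the requested ID."""
    return [seg for seg in log if seg.tag_id == tag_id]


def total_duration(segments):
    """Total length, in seconds, of the given segments."""
    return sum(seg.end_s - seg.start_s for seg in segments)


# Made-up example log: ID 1 is heard twice, ID 2 once.
log = [
    TaggedSegment(0.0, 42.5, 1),
    TaggedSegment(60.0, 95.0, 2),
    TaggedSegment(120.0, 150.0, 1),
]
hits = segments_for_id(log, 1)
```

With tags in this form, extracting everything recorded near one showpiece reduces to a filter over the segment list instead of a manual search through the whole recording.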

Application

We implemented the proposed method in an application.

This application enhances the experience of an exhibition or museum by letting a user listen to the comments on or explanations of the showpieces after the event.

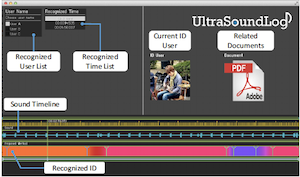

The figure shows a screenshot of the application. The bar in the middle of the figure shows the timeline of the sound log.

The color bar under the timeline represents the recognized IDs and the periods during which they were recognized. Each color corresponds to one ID: in this figure, orange, pink, and purple denote ID 1, ID 2, and ID 3, respectively. The color bar helps the user search for and play the intended sound data.
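One way such a color bar could be derived is sketched below. It assumes the recognizer emits one ID (or `None` when nothing is recognized) per fixed-length frame; the frame representation, the `frames_to_bars` helper, and the gray fallback color are assumptions made for illustration, while the three named colors follow the figure.

```python
from itertools import groupby

# Colors for ID 1 to ID 3 as described for the figure.
ID_COLORS = {1: "orange", 2: "pink", 3: "purple"}


def frames_to_bars(frame_ids, frame_len_s=1.0):
    """Merge runs of identical per-frame IDs into colored time spans.

    frame_ids: one recognized ID per frame, or None when no ID was heard.
    Returns a list of (tag_id, start_s, end_s, color) tuples.
    """
    bars = []
    index = 0  # index of the first frame of the current run
    for tag_id, run in groupby(frame_ids):
        length = len(list(run))
        if tag_id is not None:
            start_s = index * frame_len_s
            end_s = (index + length) * frame_len_s
            # Unknown IDs fall back to gray (an arbitrary choice).
            bars.append((tag_id, start_s, end_s, ID_COLORS.get(tag_id, "gray")))
        index += length
    return bars


# Made-up frame sequence with half-second frames.
frames = [None, 1, 1, 1, None, 2, 2, 3]
bars = frames_to_bars(frames, frame_len_s=0.5)
```

Each resulting tuple corresponds to one colored stretch under the timeline, and frames without a recognized ID leave a gap.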

Moreover, all recognized user names are listed in the upper left. The application user can also look up the times at which an ID was recognized and jump to the corresponding position on the timeline by clicking an entry in the list of recognized times.
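The list of recognized times and the jump behavior can be sketched as a small lookup table. The sketch assumes detections are available as (ID, start time) pairs; `build_time_index`, `format_timestamp`, and `seek_position` are hypothetical helpers, not functions of the actual application.

```python
from collections import defaultdict


def build_time_index(detections):
    """Group recognition start times by ID, sorted in ascending order.

    detections: iterable of (tag_id, start_s) pairs.
    """
    index = defaultdict(list)
    for tag_id, start_s in detections:
        index[tag_id].append(start_s)
    return {tag_id: sorted(times) for tag_id, times in index.items()}


def format_timestamp(seconds):
    """Render an offset in seconds as HH:MM:SS for display in the list."""
    minutes, secs = divmod(int(seconds), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def seek_position(index, tag_id, entry, log_length_s):
    """Playback offset for the clicked list entry, clamped to the log length."""
    start_s = index[tag_id][entry]
    return min(max(start_s, 0.0), log_length_s)


# Made-up detections: ID 1 recognized twice, ID 2 once.
detections = [(2, 310.0), (1, 75.0), (1, 1290.5)]
time_index = build_time_index(detections)
```

Clicking the second entry for ID 1 would then correspond to `seek_position(time_index, 1, 1, log_length_s)`, which yields the offset at which playback should resume.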

The application shows information about the ID user in the upper right, such as the profile and documents of the user who corresponds to the current ID on the timeline. The application user can access related information about ID users by clicking these pictures or icons.

This information is expected to enhance the user experience after the event.
