動作・人物・場所情報の超音波を用いた音声データへの埋め込み手法

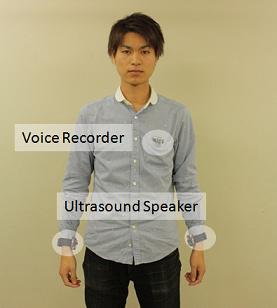

ウェアラブルコンピューティング環境では,装着型センサを使った状況認識に注目が集まっている. 一般的に用いられるセンサは加速度センサやマイクだが,前者は複数のセンサのデータを統合するために 通信を行う必要があり,後者は音のみに頼っているため実際にそのユーザが関係している音なのかが分か らない.そこで本研究では,超音波によってユーザの行動,周囲にいる人,現在居る場所などの情報を取得 し,ボイスレコーダなどの音声記録に埋め込む手法を提案する.ユーザはマイクと超音波を発する小型ス ピーカを装着し,これらの距離を表す音量の変化と,ジェスチャの速度を示すドップラー効果を利用して ジェスチャを認識する.また,環境や人に超音波ID を発信する小型スピーカを装着することで,ユーザが どこにいたか,近くに誰がいたかという情報も同時に記録する.これにより,会話音等の環境音,ジェス チャ,ユーザのいた場所,会った人物のデータすべてがマイクのみで記録できる.提案手法では他者によ る環境音が無い場合,平均86.6%の精度で認識でき,他者から発せられる環境音がある場合,平均64.7%の 精度で認識できた.

A Method for Embedding Context to Sound-based Life Log

Wearable computing technologies are attracting a great deal of attention on context-aware systems. They recognize user context by using wearable sensors. Though conventional context-aware systems use accelerometers or microphones, the former requires wearing many sensors and a storage such as PC for data storing, and the latter cannot recognize complex user motions. In this paper, we propose an activity and context recognition method where the user carries a neck-worn receiver comprising a microphone, and small speakers on his/her wrists that generate ultrasounds. The system recognizes gestures on the basis of the volume of the received sound and the Doppler effect. The former indicates the distance between the neck and wrists, and the latter indicates the speed of motions. We combine the gesture recognition by using ultrasound and conventional MFCC-based environmental-context recognition to recog- nize complex contexts from the recorded sound. Thus, our approach substitutes the wired or wireless communication typically required in body area motion sensing networks by ultrasounds. Our system also recognizes the place where the user is in and the people who are near the user by ID signals generated from speakers placed in rooms and on peo- ple. The strength of the approach is that, for offline recognition, a simple audio recorder can be used for the receiver. Contexts are embedded in the recorded sound all together, and this recorded sound creates a sound-based life log with context information. We evaluate the approach on nine gestures/activities with 10 users. Evaluation results confirmed that when there was no environmental sound generated from other people, the recognition rate was 86.6% on average. When there was environmental sound generated from other people, we compare an approach that selects used feature values depending on a situation against standard approach, which uses feature value of ultrasound and environmental sound. Results for the proposed approach are 64.3%, for the standard approach are 57.3%.