Developed system

- Nonintrusive projection for real-time multi-target interactions

- Clutter-aware projection systems based on particle filters

- Self-correcting projector using scale-rotation invariant feature points

Nonintrusive projection for real-time multi-target interactions

In clutter-aware projection systems, projected content appears only in an object-free area and does not overlap surrounding objects. As a result, although the camera can see the projected content, that content does not significantly affect further visual scene analysis: the geometrically calibrated pro-cam system can locate the projected content in captured images and exclude it from further analysis. Because the exclusion applies only to object-free areas, visual object detection still performs correctly.
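As a rough illustration of how a calibrated pro-cam pair could locate projected content in a captured image, the sketch below maps the projected rectangle into camera coordinates and marks the pixels it covers. It assumes the calibration is available as a 3x3 projector-to-camera homography for a planar surface; the function names and the matrix values are hypothetical and are not taken from the original system.

```python
def apply_homography(H, x, y):
    """Map a projector pixel (x, y) to camera coordinates with a 3x3 homography."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    u = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    v = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return u, v


def inside_convex_polygon(poly, px, py):
    """True if (px, py) lies inside or on the border of a convex polygon."""
    sign = 0
    n = len(poly)
    for i in range(n):
        ax, ay = poly[i]
        bx, by = poly[(i + 1) % n]
        cross = (bx - ax) * (py - ay) - (by - ay) * (px - ax)
        if cross != 0:
            s = 1 if cross > 0 else -1
            if sign == 0:
                sign = s
            elif s != sign:
                return False  # the point is on different sides of two edges
    return True


def projection_mask(H, rect, width, height):
    """Return a height x width grid that is True where the camera sees projected content.

    rect is (x0, y0, x1, y1) in projector pixels.
    """
    x0, y0, x1, y1 = rect
    corners = [(x0, y0), (x1, y0), (x1, y1), (x0, y1)]
    quad = [apply_homography(H, x, y) for x, y in corners]
    return [[inside_convex_polygon(quad, c, r) for c in range(width)]
            for r in range(height)]


# Hypothetical calibration: projector pixels appear in the camera image
# scaled by 0.5 and shifted by (2, 1).
H = [[0.5, 0.0, 2.0],
     [0.0, 0.5, 1.0],
     [0.0, 0.0, 1.0]]
mask = projection_mask(H, (0, 0, 8, 4), width=10, height=6)
```

Pixels where `mask` is True would then be skipped by the later detection stage, so the projected content is not mistaken for a physical object.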

Our system here reuses many concepts from the previous clutter-aware projection system. In addition, it allows images to be projected onto any area of the surface without interfering with visual object detection or scene analysis. We call this concept "nonintrusive projection": the projected images are visible to humans but invisible to the synchronized camera.

|

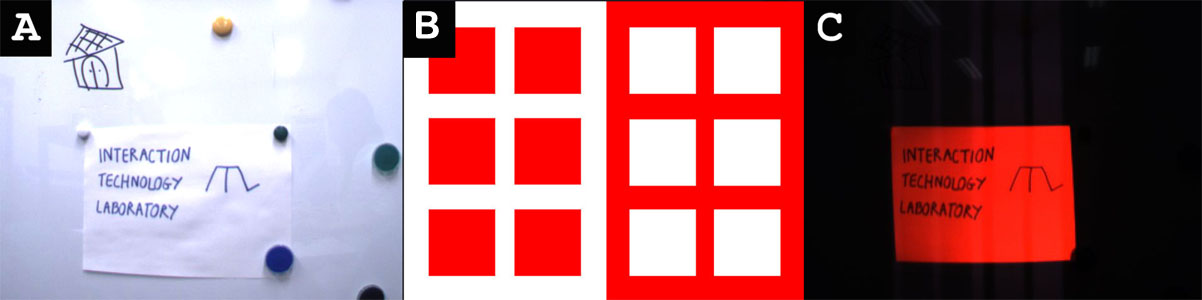

Nonintrusive projection.

(A) is an environment seen by a normal camera. (C)

is environment (A) seen by the synchronized camera when (B) is

being projected. |

By combining nonintrusive projection with multi-target tracking, our system can continuously project a unique interaction over each detected object. Distortion correction is not addressed in this system, but the motion sensor is still used for real-time geometric calibration.

|

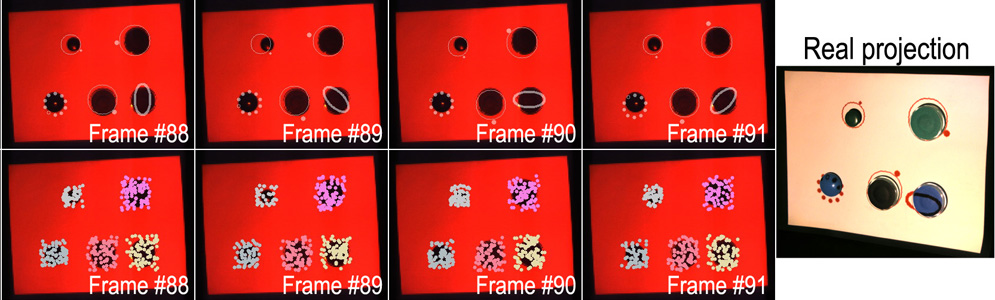

Top images shows the projected animations in camera coordinates (the white animations are drawn here for

simulation purposes).

Bottom images show multi-target tracking

results on camera coordinates. Right image shows the snapshot of the actual surface as seen by human. |

See details of the configuration used in this system here.

Related publication:

- T. Siriborvornratanakul and M. Sugimoto, "A portable projector extended for object-centered real-time interactions", In Proc. of the 6th IEEE European Conference on Visual Media Production (CVMP'09), London, pp.118-126, November 12-13, 2009.

Clutter-aware projection systems based on particle filters

In real-world scenarios, nearby surfaces are often partly hidden behind objects (e.g., calendars, bookshelves or chairs), so the shape of the available projection surface is unpredictable. Because projecting images onto unknown obstructing objects (a.k.a. clutters) causes many problems, previous handheld and fixed projection studies tend to avoid this situation and project images onto a blank surface only.

The idea of our system here is to project an undistorted image at an adaptive location that does not overlap surrounding clutters (if any). By combining appropriate detectors with multi-target tracking based on particle filters, the system monitors the appearance and disappearance of unknown clutters, so a number of clutters can be tracked efficiently while spurious objects are filtered out. At every time step, the projection target area adapts itself to fit the current clutter-free areas: the biggest undistorted target area is placed on the clutter-free area that is closest to its previous location.
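The placement rule in the last step can be sketched as follows. This is only a minimal illustration, assuming the tracked clutters have already been rasterized into a coarse occupancy grid (1 = clutter, 0 = free) and that the target keeps a fixed aspect ratio. The exhaustive search, the grid representation and the name `place_target` are assumptions made for the example, not the method used in the original system.

```python
def integral_image(grid):
    """Summed-area table so that any rectangle can be tested in O(1)."""
    h, w = len(grid), len(grid[0])
    S = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        for c in range(w):
            S[r + 1][c + 1] = grid[r][c] + S[r][c + 1] + S[r + 1][c] - S[r][c]
    return S


def clutter_count(S, r, c, rh, rw):
    """Number of clutter cells inside the rectangle with top-left (r, c)."""
    return S[r + rh][c + rw] - S[r][c + rw] - S[r + rh][c] + S[r][c]


def place_target(grid, aspect, prev_center):
    """Return (x, y, w, h) of the biggest clutter-free rectangle with the given
    aspect ratio; ties in size are broken by distance to prev_center (x, y).
    Returns None if no clutter-free rectangle fits.
    """
    aw, ah = aspect
    h, w = len(grid), len(grid[0])
    S = integral_image(grid)
    # Try the largest scale first and shrink until something fits.
    for k in range(min(w // aw, h // ah), 0, -1):
        rw, rh = k * aw, k * ah
        best = None
        for r in range(h - rh + 1):
            for c in range(w - rw + 1):
                if clutter_count(S, r, c, rh, rw) == 0:
                    cx, cy = c + rw / 2, r + rh / 2
                    d = (cx - prev_center[0]) ** 2 + (cy - prev_center[1]) ** 2
                    if best is None or d < best[0]:
                        best = (d, (c, r, rw, rh))
        if best is not None:
            return best[1]
    return None


# Hypothetical 8 x 6 workspace with one tall clutter object in column 3.
grid = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]
target = place_target(grid, aspect=(4, 3), prev_center=(6.0, 2.5))
```

Here the full 8 x 6 area is blocked by the clutter, so the search falls back to a 4 x 3 target and keeps it on the right-hand side, where the previous target centre was.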

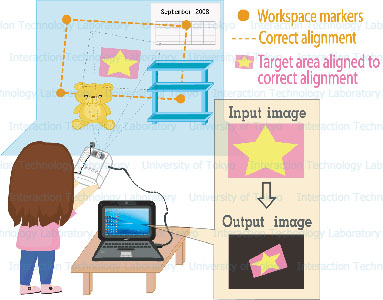

In one system, the world-correct alignment is defined by four color markers attached to a surface. The workspace is limited to the area bounded by the markers, and the pro-cam device is roughly calibrated only at startup. See details of the configuration used in this system here.

Clutter-aware projection with four markers

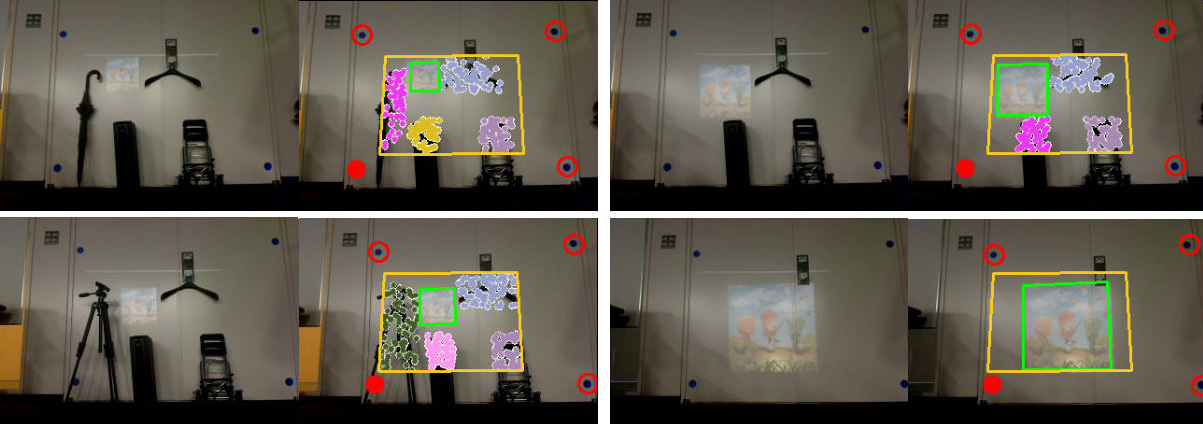

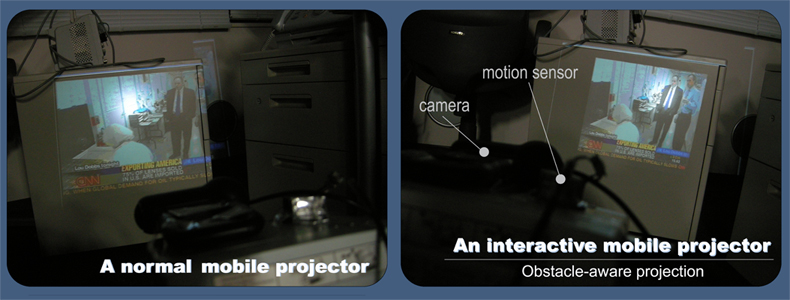

In the other system, the pro-cam device is integrated with a motion sensor. Thanks to the motion sensor, the world-correct alignment is determined without any visual information from the surface, and the pro-cam device is geometrically calibrated in real time. See details of the configuration used in this system here.

Clutter-aware projection without any marker

Related publication:

- T. Siriborvornratanakul and M. Sugimoto, "Clutter-aware Adaptive Projection inside a Dynamic Environment", In Proc. of the 15th ACM Symposium on Virtual Reality Software and Technology (VRST'08), Bordeaux, France, pp.241-242, October 27-29, 2008.

- T. Siriborvornratanakul and M. Sugimoto, "Clutter-aware Dynamic Projection System using a Handheld Projector", In Proc. of the 10th IEEE International Conference on Control Automation Robotics and Vision (ICARCV'08), Hanoi, Vietnam, pp.1271-1276, December 17-20, 2008.

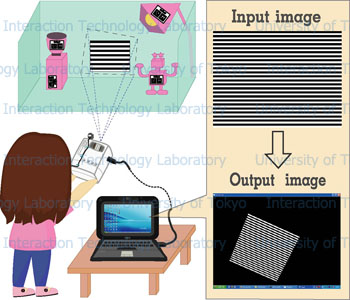

Self-correcting projector using scale-rotation invariant feature points

In real-life scenarios, nearby surfaces are not always blank but are often obstructed by objects. Conventional mobile projector systems tend to neglect this situation and project images onto a blank surface only. A blank surface has the advantage of providing the maximum area for projection, but it offers no information at all to support visual scene analysis. For this reason, previous mobile projector systems attached fiducial markers to the surface in order to extract the necessary surface information.

In contrast to conventional projection systems, our system here does not neglect cluttering or obstructing objects but treats them as additional surface information. Based on the scale-rotation invariant features extracted from the surrounding objects, our system is able to project an undistorted static image onto a cluttered planar surface using a handheld pro-cam device. Even when the projector is moved or rotated, the projected image is always shown in an undistorted rectangular shape at a static clutter-free location. Existing clutter-objects act as paper-pins that pin down the projected image at the calculated location.
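One way to picture the "paper-pin" idea is as a planar homography: feature points on the clutter objects have fixed positions on the surface, so their current positions reveal how a fixed rectangle on the surface must be drawn in projector pixels. The sketch below is an assumption-laden illustration, not the system's implementation. It uses exactly four correspondences and a direct linear solve, whereas a real system would likely use many matched features with robust estimation, and it assumes the feature positions have already been transferred into projector pixel coordinates through the pro-cam calibration. All names and numbers are hypothetical.

```python
def apply_homography(H, x, y):
    """Map a point (x, y) through a 3x3 homography."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def homography_from_4_points(src, dst):
    """Homography (with H[2][2] fixed to 1) mapping four src points to dst."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


# Hypothetical feature positions on the surface (fixed) ...
surface_pts = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
# ... and where they fall in projector pixels for a simulated device pose.
H_true = [[1.0, 0.2, 10.0], [0.1, 0.9, 5.0], [0.001, 0.0, 1.0]]
projector_pts = [apply_homography(H_true, x, y) for x, y in surface_pts]

H = homography_from_4_points(surface_pts, projector_pts)

# A rectangle that should stay fixed on the surface, and the quadrilateral
# the projector has to draw so that it appears undistorted there.
target_on_surface = [(20.0, 20.0), (80.0, 20.0), (80.0, 60.0), (20.0, 60.0)]
quad_to_draw = [apply_homography(H, x, y) for x, y in target_on_surface]
```

Re-estimating `H` whenever the device moves and redrawing `quad_to_draw` keeps the image pinned to the same surface location, which is the behaviour described above.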

See details of the configuration used in this system here.

Related publication:

- T. Siriborvornratanakul and M. Sugimoto, "Self-Correcting Handheld Projectors Inside Cluttered Environment", In Proc. of the 11th International Workshop on Advanced Image Technology (IWAIT'08), Hsinchu, Taiwan, January 7-8, 2008.